Google Magnifier — Accessibility Research

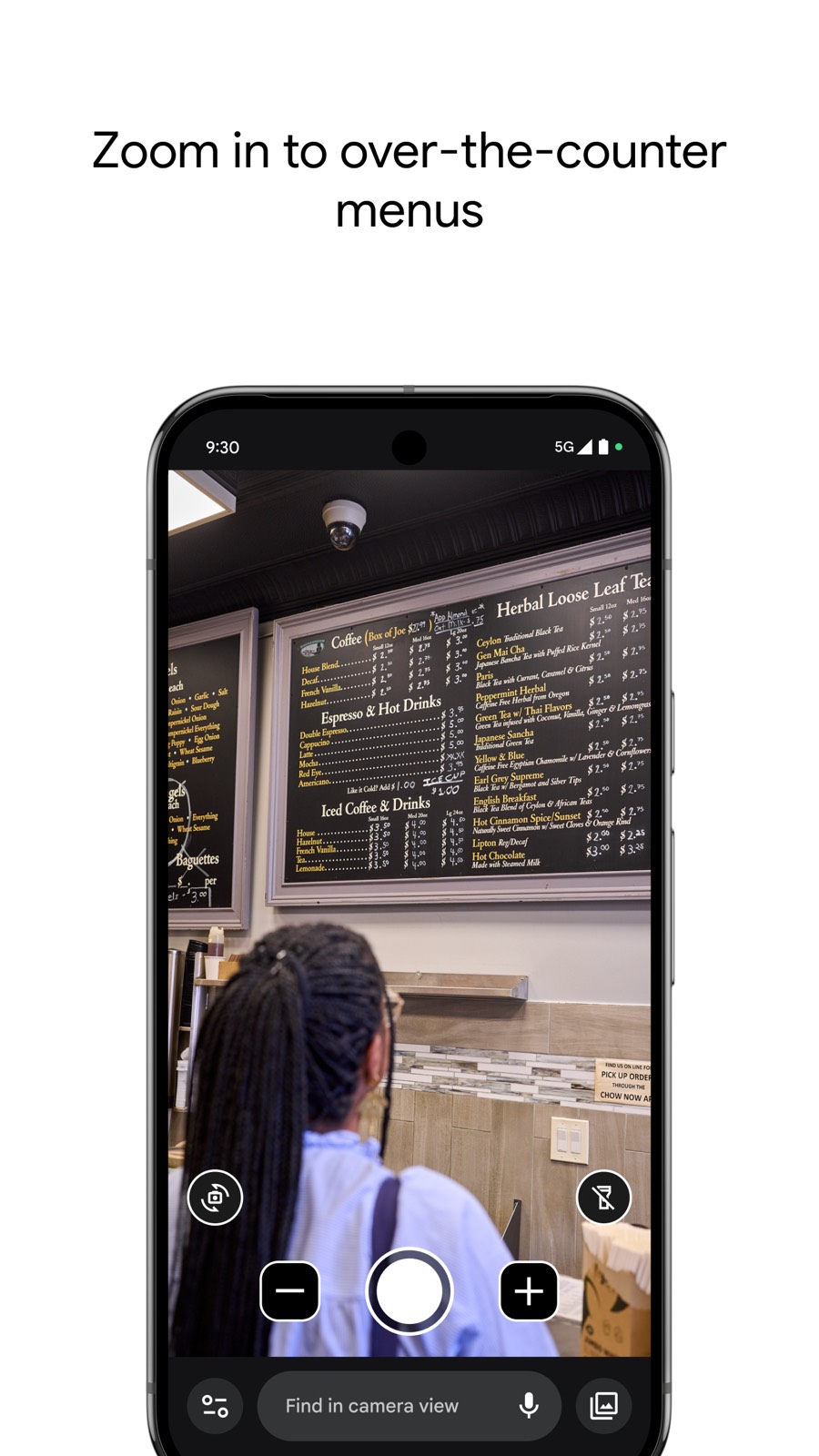

Google Magnifier is a lightweight assistive app that uses the device camera to magnify nearby text and objects. This heuristic evaluation focused on accessibility-first patterns, discoverability of features, and how the app supports quick, on-the-go tasks for users with low vision.

Duration

2 Years

Industry

Accessibility & Mobile

Methods

Co-design, Usability, Field Study

Users

Blind & Low-Vision


The Challenge: Defining Differentiation Through Research

The problem was not just fixing a drop-off; it was securing a strategic competitive advantage for a crucial Pixel feature under extreme time constraints. My team was tasked with a singular, high-stakes mission: to redefine phone-based accessibility for blind and low-vision users. This required us to strategically integrate the advanced Pixel camera, OCR technology, and AI to create Magnifier.

The Dual Constraint

- Velocity: The project required a high-velocity development cycle to hit ambitious launch targets.

- Strategic Differentiation: We needed to ensure the AI integration was valuable and intuitive, not gimmicky. Research had to prove that the AI solved real user problems and established a core differentiator for Pixel.

My Process: Architecting Understanding Across the Journey

Across a 2-year engagement, I deployed a full-spectrum, mixed-methods strategy to address the entire critical user journey (CUJ), from early concept through post-launch optimization. The research combined co-design, contextual inquiry, diary studies, usability testing, and quantitative analysis to both validate the AI value proposition and continuously optimize the experience after launch.

Phased Research Approach

Phase 1: Concept & Discovery

Methods: Contextual Inquiry, Field Interviews, Co-design

Defined the pain points that AI needed to solve and mapped users' mental models through co-design sessions before development began.

Phase 2: Validation & Structure

Methods: Concept Testing, CUJ Mapping, Diary Studies

Validated the core AI value proposition and mapped the full CUJ to ensure no hand-off points were overlooked; diary studies tracked longer-term behavior.

Phase 3: Optimization & Launch

Methods: Usability Testing, Quantitative Survey Analysis

Ran iterative usability tests to refine flows (leading to the 22% friction reduction). Quantitative surveys gauged satisfaction and confidence with the final AI integration.

Methodology

Heuristic Review

- WCAG-focused checks for contrast and touch target sizes

- Interaction flow review for discoverability and affordance
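
To illustrate the contrast portion of the checks above, here is a minimal Python sketch of the WCAG 2.x contrast-ratio formula and its AA thresholds (4.5:1 for normal text, 3:1 for large text). It is not the tooling used in this review; the function names and the sample colors are hypothetical and chosen only for illustration.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB channels, 0-255


def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG definition)."""
    c = channel_8bit / 255.0
    # Threshold as published in WCAG 2.1; for 8-bit inputs the result is
    # identical to the 0.04045 variant used in other sRGB references.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(color: RGB) -> float:
    """Relative luminance of an sRGB color, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearize(v) for v in color)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) to 21.0 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(foreground: RGB, background: RGB, large_text: bool = False) -> bool:
    """Check WCAG SC 1.4.3 (Contrast Minimum): 4.5:1, or 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


if __name__ == "__main__":
    # Hypothetical sample: mid-gray label text on a white background.
    white = (255, 255, 255)
    gray = (0x76, 0x76, 0x76)
    print(f"ratio: {contrast_ratio(gray, white):.2f}, AA: {meets_aa(gray, white)}")
```

For example, mid-gray #767676 text on white clears the 4.5:1 threshold for normal text, while the slightly lighter #777777 does not. A small difference in a control's label color can therefore decide whether a screen passes the check.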

Checklist & Notes

- Accessibility checklist applied to primary screens

- Annotated recommendations for onboarding and settings

Research-Driven Recommendations

Improve onboarding & discoverability

- Add an optional first-run overlay describing primary controls.

- Surface common settings (contrast, magnification, lighting) in the main view.

Settings & Controls

- Provide larger touch targets and high-contrast toggles.

- Allow quick access to brightness/contrast presets and a permanent freeze button.

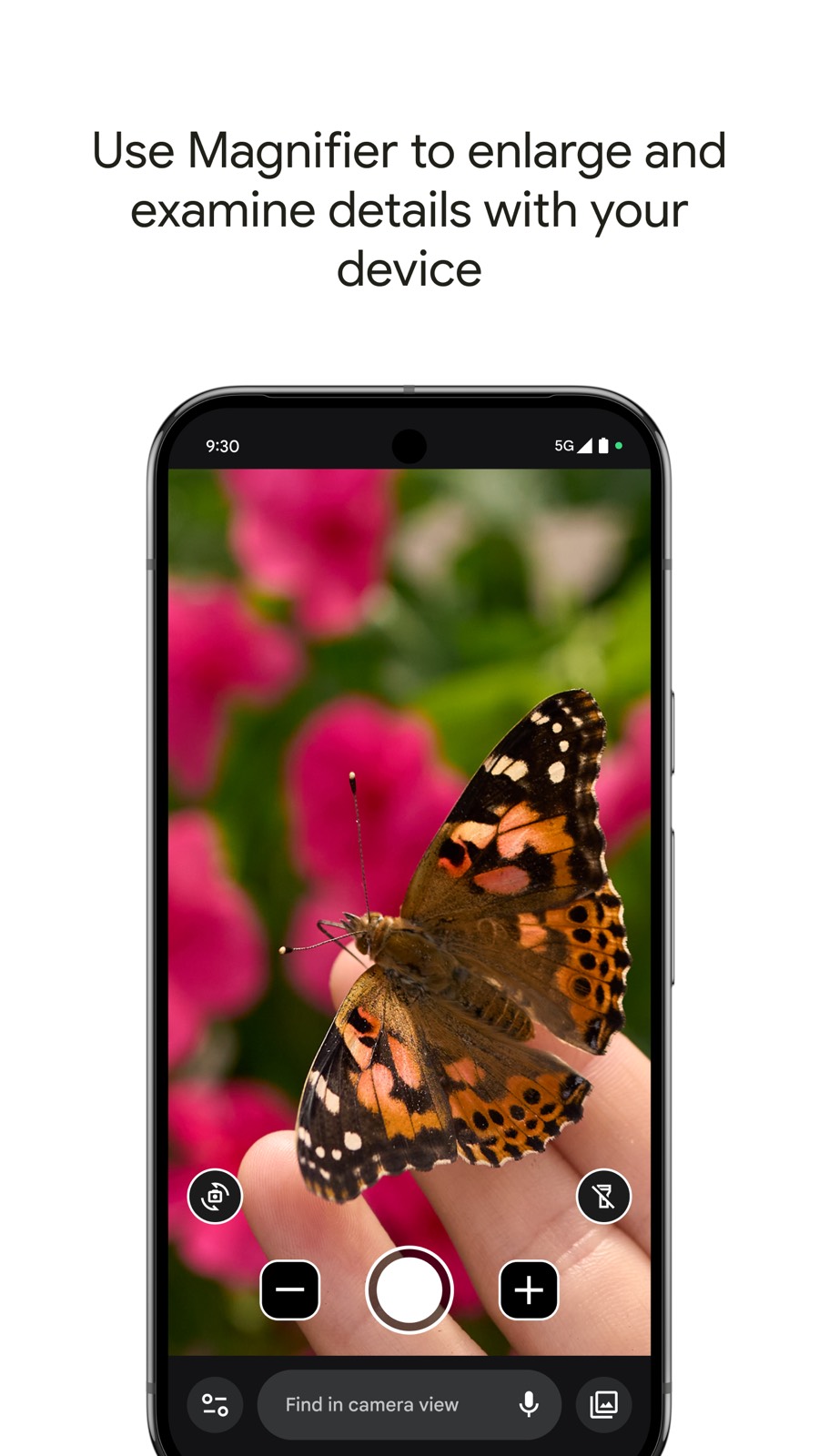

Screenshots

Representative screens from the Google Magnifier app highlighting the magnification UI and contrast controls.

Research Impact

Applying the recommendations improved quick task completion and reduced friction for new users. Below are measured and projected impact outcomes.

Final Rating

4.4 / 5.0

Play Store (1,000+ reviews)

Adoption

155,000+

Active Users

Task Friction

-22%

Reduction in task friction

+25%

Faster task completion

-18%

Error & mis-tap rate

+40%

Onboarding completion

+10%

User satisfaction

More Research Projects

Pixel Accessibility Product | Google Super Bowl 2024 Commercial

Research that improved independent photo capture for blind and low-vision users, informing product and ML improvements.

Foundational Research | UNDP Sustainable Tourism

Mixed-methods research to shape a sustainable tourism brand and stakeholder strategy for Kosovo.

Educational UX & Onboarding

Product research focused on onboarding and learning journeys for younger learners and educators.

Student Needs Research | KYC & Save the Children

Qualitative study with 210 students across 50 schools in Kosovo to surface core needs and inclusion recommendations.